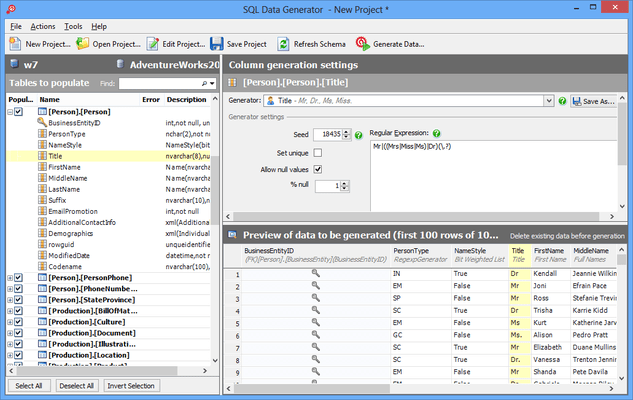

Datanamic Data Generator is a software tool that generates test data for database testing. It either inserts the generated data directly into the database or builds SQL INSERT statements, and it supports multiple database platforms. Hazy synthetic data uses deep learning to learn the behavioural patterns in static and transaction data, so you retain the value without the risk. This can help generate business insight and break down silos and barriers to innovation.

The introduction of QR and Datamatrix codes has changed the business world. These codes have many benefits; for example, they can pack a lot of data into a small space. Datamatrix codes are 2D codes that store data in a two-dimensional grid. They also have built-in error checking: if a code is damaged, the damage can be detected, and the code can often still be read. In this guide, we’ll discuss the applications and benefits of Datamatrix codes.

Like QR codes, Datamatrix codes are two-dimensional. They are made up of black and white modules arranged in a compact square pattern. The total number of modules depends on the amount of data encoded in the symbol. A Datamatrix symbol can store up to 2335 alphanumeric characters, which is impressive for its size. These characters can include a unique serial number, a manufacturer ID, and much more.

These codes are easy to identify because they are square, with all the black and white cells framed inside the box. An L-shaped border of solid lines delimits these cells. Datamatrix codes carry redundant information, so they offer high reading reliability: a damaged code can still be read, and the lost information can be recovered when up to about 30% of the symbol is damaged.

Another interesting feature of Datamatrix codes is that they can be read in low contrast conditions. You can encode up to 3116 numeric digits in a Datamatrix code. However, the symbol gets larger as the amount of encoded data increases.

It’s pretty simple to use this online code generator. Open your browser and paste this link https://www.datamatrix-code-generator.com/Code/ in the address bar. Then follow these steps to generate your own Datamatrix codes.

- Enter the alphanumeric or numeric information that you want to encode in the input box.

- After entering the information, click on the preview to check your Datamatrix code.

You can download the code in your desired format, such as PNG or PDF format.

Over the past few years, Datamatrix codes have become popular. They allow direct marking on components and parts. Therefore, they’re used in industrial sectors. Moreover, they help in quality control, identification, and traceability. Some common applications of Datamatrix codes are given below:

- They have become popular for marking small items, such as electronic accessories or components.

- As mentioned earlier, they can store a large amount of data in a small space, so they’re popular in the pharmaceutical industry.

- NASA reportedly first used Datamatrix codes in 1980, engraving them into parts of space rockets.

- They are good for asset tracking and data-driven applications.

- These codes are more compact than QR codes, but they’re used mainly in warehouses and industrial settings rather than in consumer applications.

- They can be used in personal computers, tablets, mobile phones, and consumer electronics.

Some advantages of using Datamatrix codes are:

- Higher data density. It means they occupy less space

- They have a high fault tolerance of up to 33%

- They can be read at any orientation, from 0 to 360 degrees

- Lower contrast is enough for sufficient scan readability.

You can mark these codes on parts in three ways:

- Laser marking

- Engraving

- Ink printing

In the industrial sector, some devices are used for reading these codes, such as:

- Laser readers

- CCD readers

- Scanners

- Portable terminals

For consumer usage, you can find some mobile devices that can read these Datamatrix codes. If you’re already using QR code readers, you can try them because some QR readers also have the ability to read Datamatrix codes.

Datamatrix codes and QR codes are both 2D codes, and they have some similarities and differences. Let’s explore some of them.

Similarities

- They both require a quiet zone. A quiet zone is an empty white border around the code.

- Both use fixed finder patterns that help a reader detect and decode the symbol.

- When more data needs to be encoded, both these barcodes add more modules.

Differences

The two codes look similar at first glance, but they differ in several respects:

- The smallest possible version of QR code comprises 21 × 21 modules. On the other hand, Datamatrix code can be as small as 10 × 10 modules.

- Datamatrix codes can only hold 2335 alphanumeric characters, while QR codes can store up to 4296 alphanumeric characters.

- In Datamatrix codes, more space is available for data encoding, and they’re more compact than QR codes.

- Another significant difference between the two is the error correction (EC) level. Error correction is the ability to restore data when the code is damaged. QR codes have four EC levels, with a maximum EC of 30%. On the other hand, Datamatrix codes have an EC of 33%.

- Datamatrix codes are more secure and reliable than QR codes.
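As a rough illustration of the capacity figures above, the following sketch checks which code type can hold a given number of alphanumeric characters. The dictionary and the function name `codes_that_fit` are hypothetical; the numbers are simply the ones quoted in this comparison.

```python
# Figures quoted in the comparison above: maximum alphanumeric capacity
# and smallest symbol size (modules per side). Names are illustrative only.
CODE_SPECS = {
    'Datamatrix': {'max_alphanumeric': 2335, 'min_modules': 10},
    'QR': {'max_alphanumeric': 4296, 'min_modules': 21},
}


def codes_that_fit(n_chars):
    """Return the code types whose maximum capacity can hold n_chars."""
    return [name for name, spec in CODE_SPECS.items()
            if n_chars <= spec['max_alphanumeric']]


short_serial = codes_that_fit(40)      # fits in both code types
long_payload = codes_that_fit(3000)    # exceeds the Datamatrix maximum
```

A short serial number fits in either code, so the smaller Datamatrix symbol is usually the natural choice; only payloads above 2335 alphanumeric characters force a QR code.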

Based on applications, benefits, and error correction levels, Datamatrix codes are a popular and effective option.

python, keras 2, fit_generator, large dataset, multiprocessing

By Afshine Amidi and Shervine Amidi

Motivation

Have you ever had to load a dataset that was so memory consuming that you wished a magic trick could seamlessly take care of that? Large datasets are increasingly becoming part of our lives, as we are able to harness an ever-growing quantity of data.

We have to keep in mind that in some cases, even the most state-of-the-art configuration won't have enough memory space to process the data the way we used to do it. That is the reason why we need to find other ways to do that task efficiently. In this blog post, we are going to show you how to generate your dataset on multiple cores in real time and feed it right away to your deep learning model.

The framework used in this tutorial is the one provided by Python's high-level package Keras, which can be used on top of a GPU installation of either TensorFlow or Theano.

Tutorial

Previous situation

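Before reading this article, your Keras script probably loaded the entire dataset into memory in a single line and then passed the resulting arrays to the model's fit method.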

This article is all about changing the line loading the entire dataset at once. Indeed, this task may cause issues as all of the training samples may not be able to fit in memory at the same time.

In order to do so, let's dive into a step by step recipe that builds a data generator suited for this situation. By the way, the following code is a good skeleton to use for your own project; you can copy/paste the following pieces of code and fill the blanks accordingly.

Notations

Before getting started, let's go through a few organizational tips that are particularly useful when dealing with large datasets.

Let ID be the Python string that identifies a given sample of the dataset. A good way to keep track of samples and their labels is to adopt the following framework:

- Create a dictionary called partition where you gather:

  - in partition['train'] a list of training IDs

  - in partition['validation'] a list of validation IDs

- Create a dictionary called labels where for each ID of the dataset, the associated label is given by labels[ID]

For example, let's say that our training set contains id-1, id-2 and id-3 with respective labels 0, 1 and 2, with a validation set containing id-4 with label 1. In that case, the Python variable partition is {'train': ['id-1', 'id-2', 'id-3'], 'validation': ['id-4']} and labels is {'id-1': 0, 'id-2': 1, 'id-3': 2, 'id-4': 1}.
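In practice these two dictionaries are usually built from some listing of the dataset rather than typed by hand. Here is a minimal sketch of one way to do it, assuming the samples are available as (ID, label) pairs and the validation IDs are chosen beforehand; the helper name `build_partition_and_labels` is hypothetical.

```python
def build_partition_and_labels(samples, validation_ids):
    """Split (ID, label) pairs into the partition and labels dictionaries.

    samples: iterable of (ID, label) pairs.
    validation_ids: set of IDs to hold out for validation (an assumption;
    any other splitting rule would work just as well).
    """
    partition = {'train': [], 'validation': []}
    labels = {}
    for sample_id, label in samples:
        split = 'validation' if sample_id in validation_ids else 'train'
        partition[split].append(sample_id)
        labels[sample_id] = label
    return partition, labels


samples = [('id-1', 0), ('id-2', 1), ('id-3', 2), ('id-4', 1)]
partition, labels = build_partition_and_labels(samples, validation_ids={'id-4'})
```

With the four samples of the example, this reproduces the partition and labels variables described above.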

Also, for the sake of modularity, we will write Keras code and customized classes in separate files, so that your folder contains the Keras script, the file holding the customized classes, and data/, which is assumed to be the folder containing your dataset.

Finally, it is good to note that the code in this tutorial is aimed at being general and minimal, so that you can easily adapt it for your own dataset.

Data generator

Now, let's go through the details of how to set the Python class DataGenerator, which will be used for real-time data feeding to your Keras model.

First, let's write the initialization function of the class. We make the latter inherit the properties of keras.utils.Sequence so that we can leverage nice functionalities such as multiprocessing.

We put as arguments relevant information about the data, such as dimension sizes (e.g. a volume of length 32 will have dim=(32,32,32)), number of channels, number of classes, batch size, or decide whether we want to shuffle our data at generation. We also store important information such as labels and the list of IDs that we wish to generate at each pass.

Here, the method on_epoch_end is triggered once at the very beginning as well as at the end of each epoch. If the shuffle parameter is set to True, we will get a new order of exploration at each pass (or just keep a linear exploration scheme otherwise).

Shuffling the order in which examples are fed to the classifier is helpful so that batches between epochs do not look alike. Doing so will eventually make our model more robust.
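To make the role of on_epoch_end concrete, here is a small standalone sketch of the index bookkeeping only. It uses the standard library's random module as a stand-in for NumPy, and the class name `EpochOrder` and the seed parameter are assumptions made for illustration.

```python
import random


class EpochOrder:
    """Tracks the order in which sample indexes are visited in an epoch."""

    def __init__(self, n_samples, shuffle=True, seed=None):
        self.n_samples = n_samples
        self.shuffle = shuffle
        self._rng = random.Random(seed)  # seed is only here for reproducibility
        self.on_epoch_end()  # triggered once at the very beginning

    def on_epoch_end(self):
        """Rebuild the index list and reshuffle it if shuffle is True."""
        self.indexes = list(range(self.n_samples))
        if self.shuffle:
            self._rng.shuffle(self.indexes)


order = EpochOrder(n_samples=8, shuffle=True, seed=0)
first_epoch = list(order.indexes)
order.on_epoch_end()  # Keras would call this at the end of each epoch
second_epoch = list(order.indexes)
linear = EpochOrder(n_samples=5, shuffle=False).indexes
```

Every epoch visits the same set of indexes; only their order changes when shuffle is True.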

Another method that is core to the generation process is the one that achieves the most crucial job: producing batches of data. The private method in charge of this task is called __data_generation and takes as argument the list of IDs of the target batch.

During data generation, this code reads the NumPy array of each example from its corresponding file ID.npy. Since our code is multicore-friendly, note that you can do more complex operations instead (e.g. computations from source files) without worrying that data generation becomes a bottleneck in the training process.

Also, please note that we used Keras' keras.utils.to_categorical function to convert our numerical labels stored in y to a binary form (e.g. in a 6-class problem, the third label corresponds to [0 0 1 0 0 0]) suited for classification.
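The binary form mentioned above can be reproduced in a few lines. The function below is a plain-Python stand-in written for illustration, not the Keras implementation; it assumes labels are integers counted from 0, so the third label is 2.

```python
def one_hot(label, n_classes):
    """Binary (one-hot) form of an integer label in 0..n_classes-1."""
    return [1 if k == label else 0 for k in range(n_classes)]


# In a 6-class problem, the third label (index 2) becomes [0 0 1 0 0 0].
third_label = one_hot(2, n_classes=6)
```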

Now comes the part where we put all these components together. Each call requests a batch index between 0 and the total number of batches, where the latter is specified in the __len__ method.

A common practice is to set this value to $$\biggl\lfloor\frac{\#\textrm{ samples}}{\textrm{batch size}}\biggr\rfloor$$ so that the model sees the training samples at most once per epoch.

Now, when the batch corresponding to a given index is called, the generator executes the __getitem__ method to generate it.
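The two steps just described, counting the batches and picking the IDs of a given batch, boil down to integer division and slicing. Here is a minimal sketch with hypothetical function names, using 10 samples and a batch size of 4.

```python
def number_of_batches(n_samples, batch_size):
    """Batches per epoch: floor(n_samples / batch_size)."""
    return n_samples // batch_size


def batch_ids(list_IDs, indexes, index, batch_size):
    """IDs that make up batch number `index`, following the current order."""
    batch_indexes = indexes[index * batch_size:(index + 1) * batch_size]
    return [list_IDs[k] for k in batch_indexes]


list_IDs = ['id-%d' % i for i in range(1, 11)]  # 10 samples
indexes = list(range(len(list_IDs)))            # unshuffled order
n_batches = number_of_batches(len(list_IDs), batch_size=4)
second_batch = batch_ids(list_IDs, indexes, index=1, batch_size=4)
```

With these numbers there are 2 batches per epoch, so the last two samples are left out of that epoch. This is why each sample is seen at most once, and shuffling between epochs changes which samples are left out.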

The complete code corresponding to the steps that we described in this section is shown below.
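A minimal sketch of such a class, assembled from the steps described in this section, might look as follows. It is not necessarily identical to the original listing: the data_dir parameter, the default values and the import fallback (which lets the sketch run without Keras installed) are assumptions added for illustration.

```python
import os
import tempfile

import numpy as np

try:
    from keras.utils import Sequence, to_categorical
except ImportError:
    # Assumption: plain stand-ins so the sketch can be tried without Keras.
    Sequence = object

    def to_categorical(y, num_classes):
        return np.eye(num_classes)[y]


class DataGenerator(Sequence):
    """Generates batches for Keras from one .npy file per sample ID."""

    def __init__(self, list_IDs, labels, batch_size=32, dim=(32, 32, 32),
                 n_channels=1, n_classes=10, shuffle=True, data_dir='data'):
        super().__init__()
        self.list_IDs = list_IDs
        self.labels = labels
        self.batch_size = batch_size
        self.dim = dim
        self.n_channels = n_channels
        self.n_classes = n_classes
        self.shuffle = shuffle
        self.data_dir = data_dir  # hypothetical parameter, not in the text
        self.on_epoch_end()

    def __len__(self):
        """Number of batches per epoch."""
        return len(self.list_IDs) // self.batch_size

    def __getitem__(self, index):
        """Generate the batch with the given index."""
        indexes = self.indexes[index * self.batch_size:
                               (index + 1) * self.batch_size]
        list_IDs_temp = [self.list_IDs[k] for k in indexes]
        return self.__data_generation(list_IDs_temp)

    def on_epoch_end(self):
        """Reset the sample order, reshuffling it if requested."""
        self.indexes = np.arange(len(self.list_IDs))
        if self.shuffle:
            np.random.shuffle(self.indexes)

    def __data_generation(self, list_IDs_temp):
        """Load the samples of one batch and one-hot encode their labels."""
        X = np.empty((self.batch_size, *self.dim, self.n_channels))
        y = np.empty(self.batch_size, dtype=int)
        for i, ID in enumerate(list_IDs_temp):
            X[i,] = np.load(os.path.join(self.data_dir, ID + '.npy'))
            y[i] = self.labels[ID]
        return X, to_categorical(y, num_classes=self.n_classes)


# Small demonstration on synthetic files written to a temporary folder.
with tempfile.TemporaryDirectory() as tmp:
    ids = ['id-1', 'id-2', 'id-3']
    for ID in ids:
        np.save(os.path.join(tmp, ID + '.npy'), np.zeros((4, 4, 1)))
    generator = DataGenerator(ids, {'id-1': 0, 'id-2': 1, 'id-3': 2},
                              batch_size=2, dim=(4, 4), n_channels=1,
                              n_classes=3, shuffle=False, data_dir=tmp)
    n_batches = len(generator)
    X, y = generator[0]
```

In the demonstration, three samples with a batch size of 2 give a single batch per epoch, and each stored array must have shape dim + (n_channels,) for the assignment into X to work.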

Keras script

Now, we have to modify our Keras script accordingly so that it accepts the generator that we just created.

As you can see, we called from model the fit_generator method instead of fit, where we just had to give our training generator as one of the arguments. Keras takes care of the rest!

Note that our implementation enables the use of the use_multiprocessing argument of fit_generator, where the number specified in workers sets how many workers generate batches in parallel. A high enough number of workers ensures that CPU computations are efficiently managed, i.e. that the bottleneck is indeed the neural network's forward and backward operations on the GPU (and not data generation).
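The training call described above can be sketched as a small helper. The function name `train`, the default of 6 workers and the `StubModel` placeholder are assumptions made so that the snippet runs on its own; in a real script, model would be a compiled Keras model and the two generators would be instances of the data generator class. Note also that more recent Keras releases deprecate fit_generator in favor of fit, which accepts the same kind of generator.

```python
def train(model, training_generator, validation_generator, workers=6):
    """Fit `model` on batches produced by the generators.

    `workers` sets how many workers build batches in parallel; 6 is an
    arbitrary illustrative value.
    """
    return model.fit_generator(generator=training_generator,
                               validation_data=validation_generator,
                               use_multiprocessing=True,
                               workers=workers)


class StubModel:
    """Placeholder for a compiled Keras model, so the sketch runs alone."""

    def fit_generator(self, **kwargs):
        self.received = kwargs  # record the arguments for inspection
        return 'history'


model = StubModel()
result = train(model, training_generator='train-gen',
               validation_generator='val-gen')
```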


Conclusion

This is it! When you run your Keras script, you will see that during the training phase, data is generated in parallel by the CPU and then directly fed to the GPU.

You can find a complete example of this strategy applied to a specific case on GitHub, where the data generation code as well as the Keras script are available.
